Daniel Fine

Media Clown

Digital Media and Live Performance

Role: Co-Principal Investigator, Co-Creator, Co-Producer, Director

Peer-Reviewed Outcomes/Dissemination:

November 2022

Simultaneous in-person (Iowa & Las Vegas) and virtual (internet) performance at Live Design International (LDI). This was the first time LDI had ever featured a live performance as a panel.

June 2021

Workshop virtual/online performance of new research. Supported by grant funding from Provost Investment in Strategic Priorities Award at UI and Epic Mega Grants. ABW Media Theatre, University of Iowa.

June 2019

International Premiere Performance: Prague Quadrennial, Czech Republic, the largest international exhibition and festival dedicated to scenography, performance design and theatre architecture. Of more than one hundred and thirty applications from around the world, Media Clown was one of forty-three performances selected by a jury led by international designer Patrick Du Wors.

May 2019

Invited Performances: Four-week residency at Backstage Academy, West Yorkshire, UK.

June 2019

Invited guest lecture at the School of Art, Design and Performance at the University of Portsmouth.

October 2019

Invited presenter for public lecture series at The Obermann Center for Advanced Studies.

October 2020

Peer-Reviewed Research Team Panel Presentation: Live Design International. (Canceled due to the pandemic.)

October 2019

Peer-Reviewed Acceptance for a Poster Presentation at the 2019 Movement and Computing Conference. (Declined.)

The total amount of grants received to date is $102,050.00.

Major grants of note include:

- $25,000.00 external grant from Epic Mega Grants, a highly competitive grant to “support game developers, enterprise professionals, media and entertainment creators, students, educators, and tool developers doing amazing things with Unreal Engine or enhancing open-source capabilities for the 3D graphics community”

- $18,000.00 development grant for Interdisciplinary Research from the Obermann Center at UI

- $2,000.00 Provost Investment in Strategic Priorities Award at UI.

Photo Gallery:

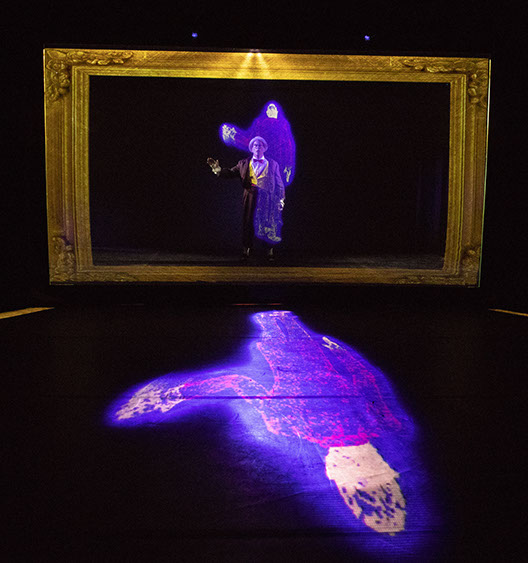

First draft of promotion photos for PQ2019. Photo by Courtney Gaston.

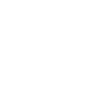

3D previsualization in Disguise media server for PQ2019.

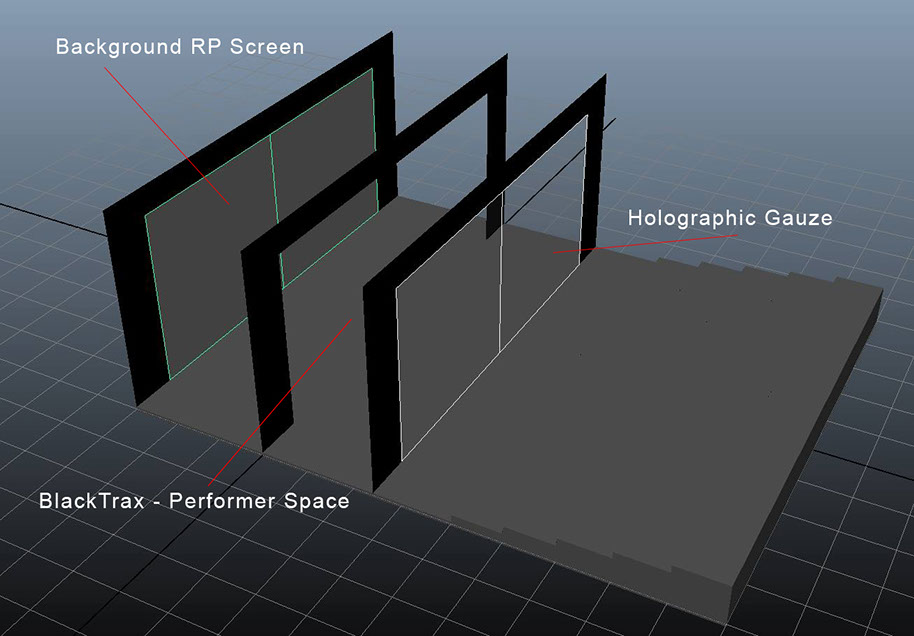

Early motion capture and projection tests.

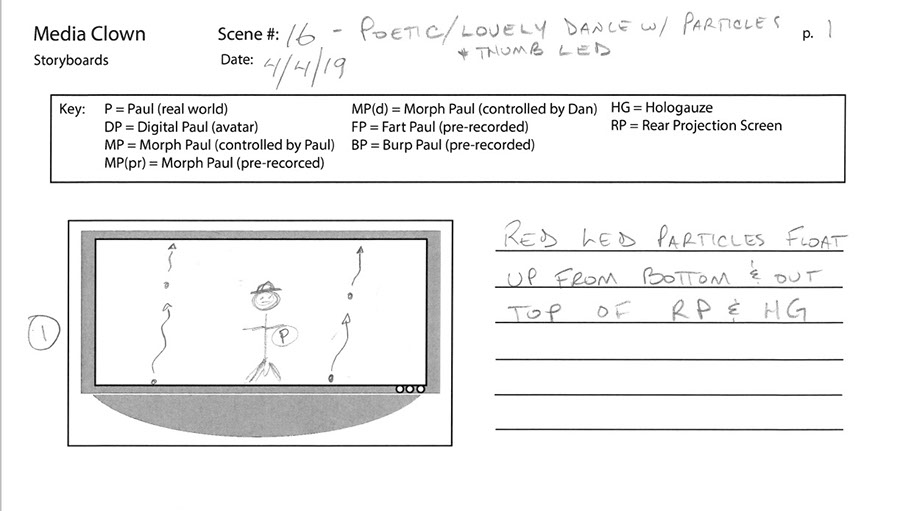

Storyboard created by Daniel Fine for particle sequence. PQ2019.

Setting up the motion capture suit at Backstage Academy. Photo by Daniel Fine

The Iowa team at PQ2019. PIs and graduate students.

The PIs in front of a poster advertising their upcoming talk at Portsmouth University, UK.

Backstage Academy Performance. Photo by Daniel Fine

Backstage Academy Performance. Photo by Daniel Fine

PQ Performance. Modern twist on classic Duck Soup routine. Photo by Dana Keeton

Stuck inside an iPad. PQ19 Performance. Photo by Dana Keeton

Particles swirl and moving lights auto-match color/intensity of projections. PQ19 Performance. Photo by Dana Keeton

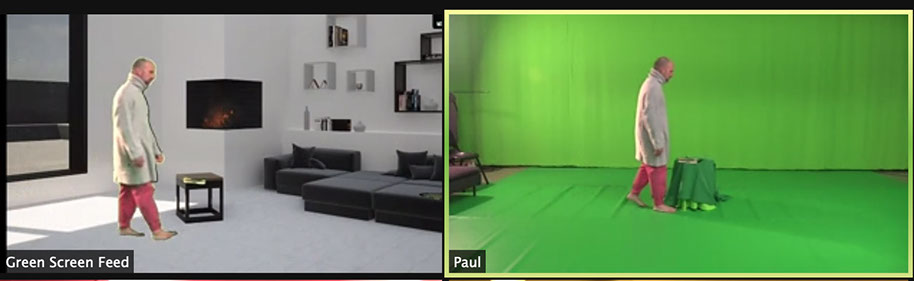

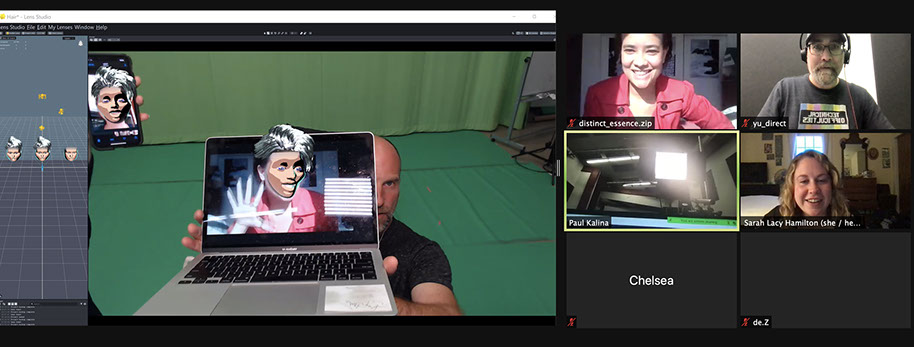

Early tests for virtual performance on Zoom. Background created in Unreal and ported to TouchDesigner, where it is composited with the live video stream.

Early tests with compositing using Isadora.

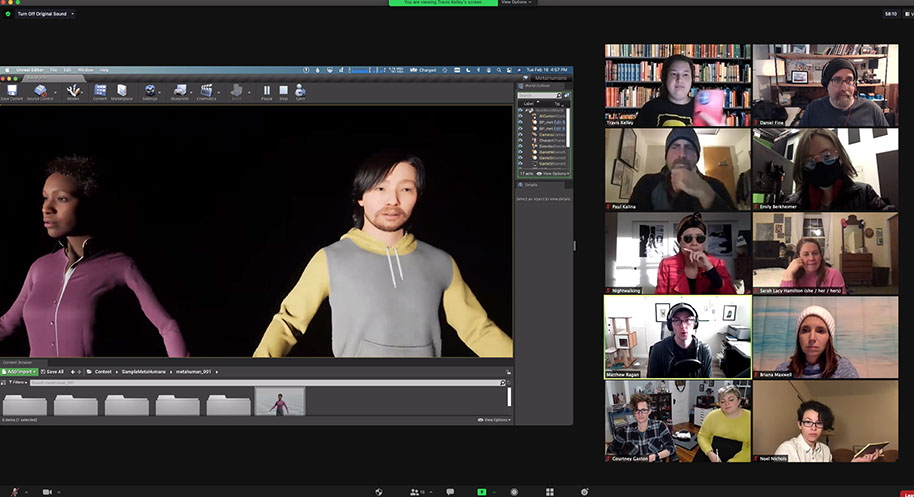

Testing Unreal's MetaHuman as an option for MoCap avatar control for the character of de.Z.

Early test using SnapCam's Lens Studio as an AR option for MoCap control of the character de.Z.

Early test using SnapCam's Lens Studio as an AR option for MoCap control of the character de.Z. Clowning with the facial recognition.

Test using SnapCam's Lens Studio as an AR option for MoCap control for live audiences' video feeds.

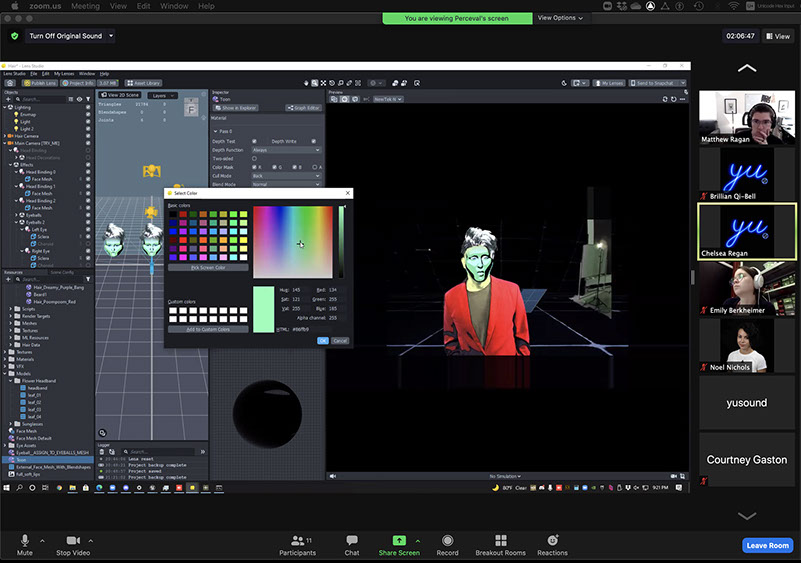

Tweaking parameters on SnapCam's Lens Studio for AR character de.Z.

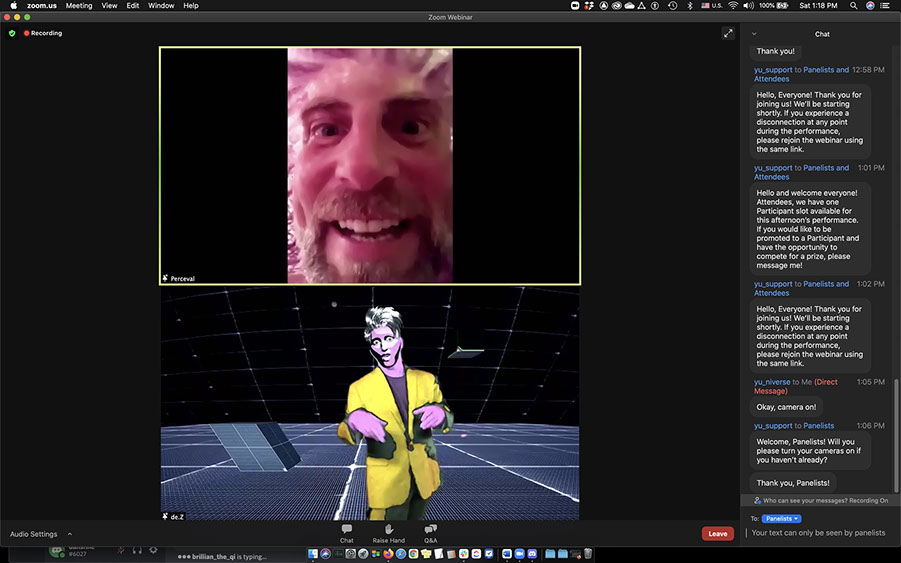

June 2020 online/virtual workshop. Prerecording of Paul playing the character of Percy (top) and Paul (live) playing the character of de.Z (bottom).

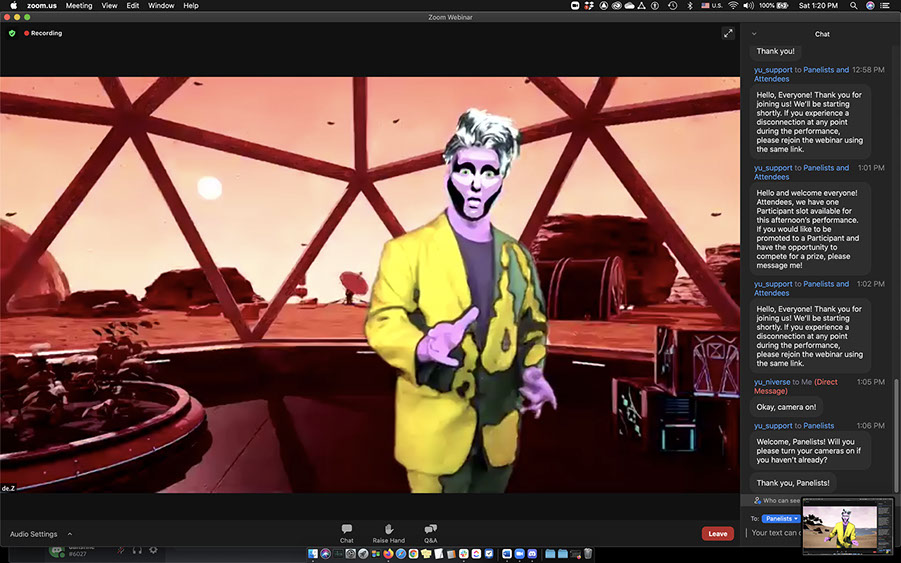

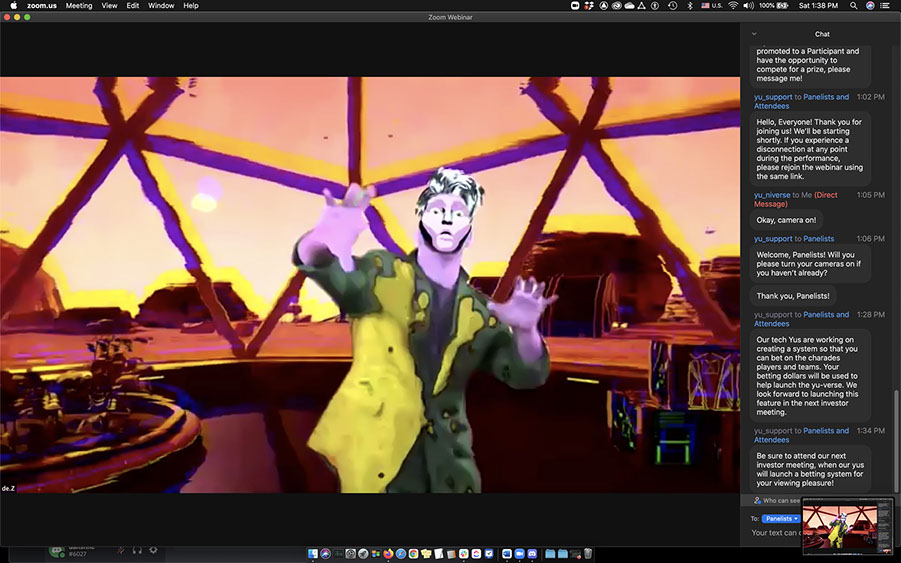

de.Z in a different background. Background created in Unreal and ported to TouchDesigner, where it is composited with the live video stream. June 2021 virtual/online workshop performance.

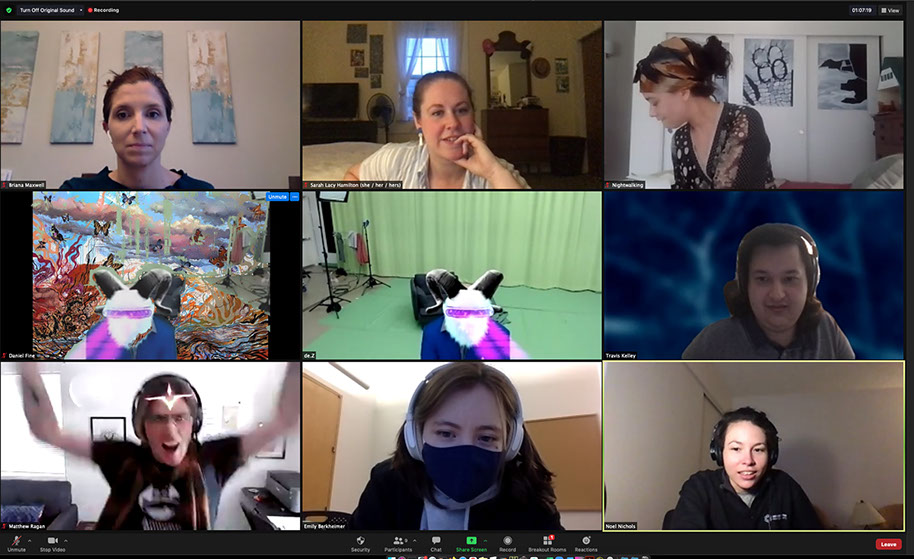

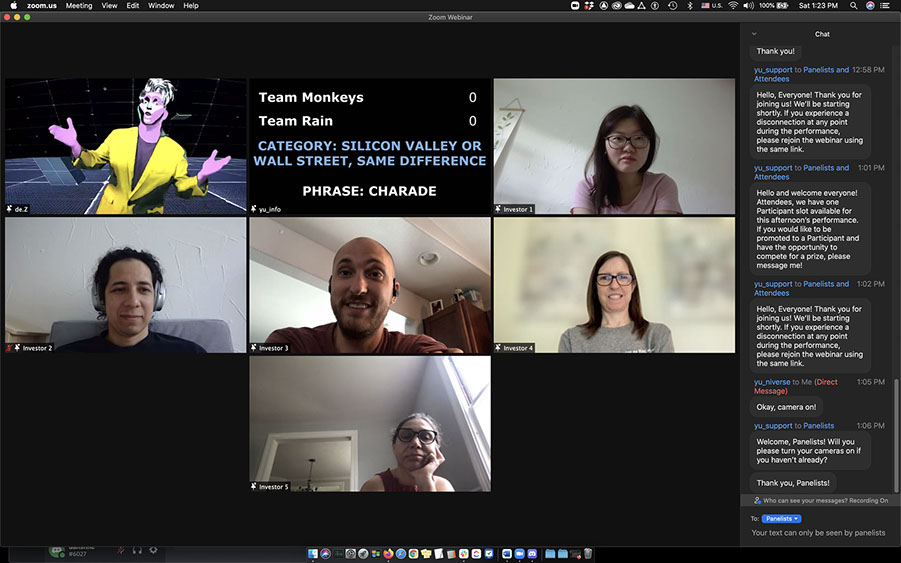

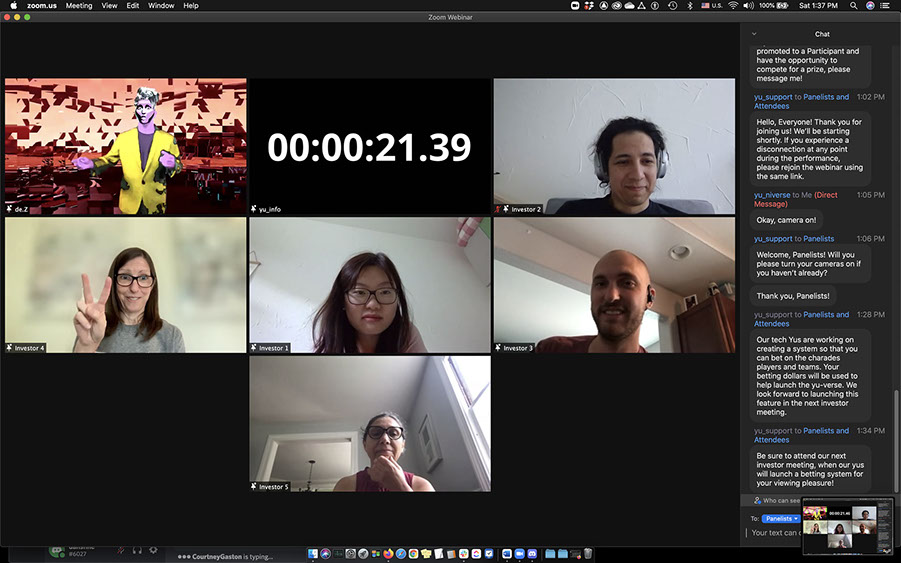

de.Z playing charades with audience members in Zoom. June 2021 virtual/online workshop performance.

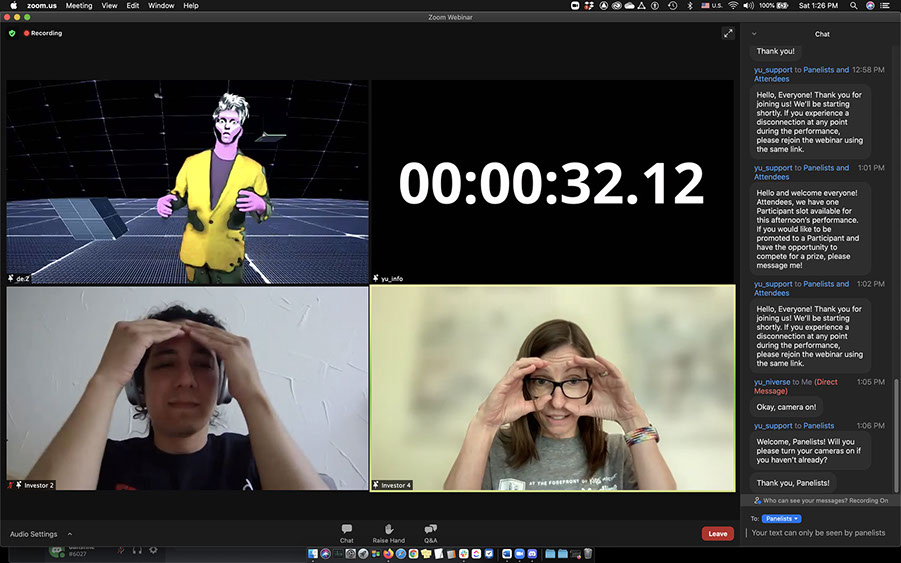

Audience members playing charades. June 2021 virtual/online workshop performance.

June 2020 online/virtual workshop performance.

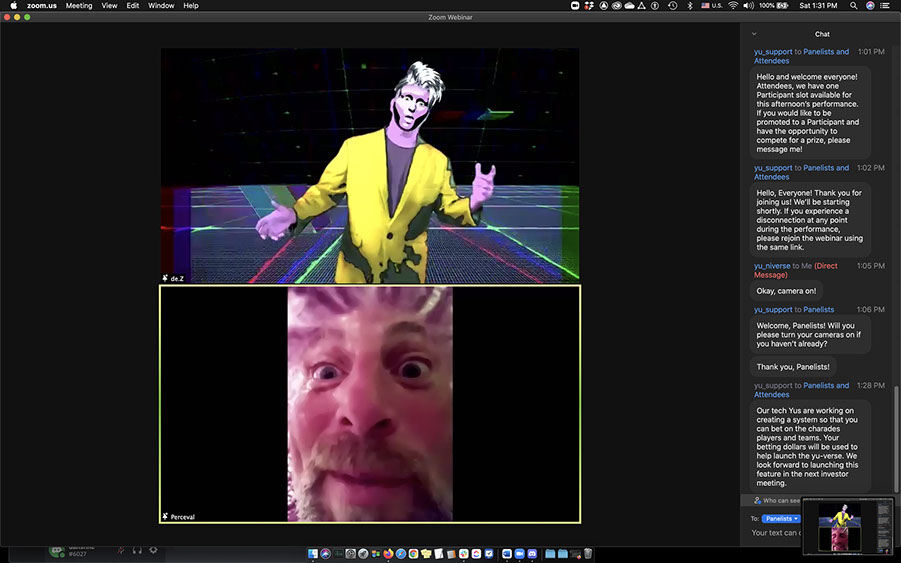

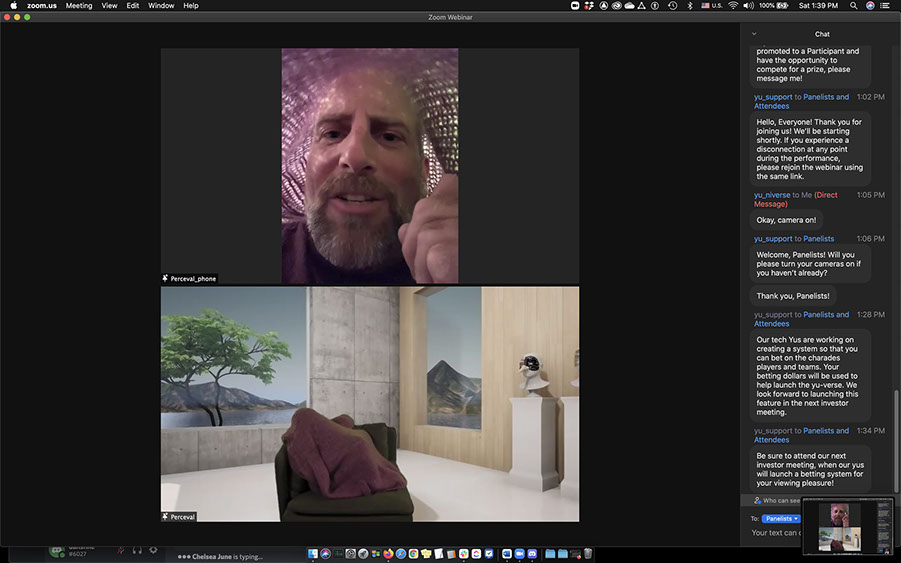

Prerecording of Paul playing the character of Percy (bottom) and Paul (live) playing the character of de.Z (top). Real-time RGB effect applied to video via custom software controllable by an operator on a website. June 2021 online/virtual workshop performance.

Close-up of de.Z with AR filter. June 2021 online/virtual workshop performance.

Real-time decay effect applied to video (top left) via custom software controllable by an operator on a website. June 2021 online/virtual workshop performance.

Real-time RGB effect applied to video via custom software controllable by an operator on a website. June 2021 online/virtual workshop performance.

Paul playing the character of Percy. Live video feed inside the sweater (top) from a cellphone dialed into the Zoom call, and the main camera view (bottom). June 2021 online/virtual workshop performance.

Percy talking directly to audience. Background: his projected world. June 2021 online/virtual workshop performance.

Percy talking directly to audience. Background: his real world (still a real-time background in Unreal). June 2021 online/virtual workshop performance.

Videos:

Prague Quadrennial 2019 Performance:

Virtual Performance Workshop June 2021:

Backstage Tour June 2021 Virtual Performance:

Backstage/Final Composite June 2021 Virtual Performance:

Key Creative Team:

Co-PI, Co-Creator, Co-Producer, Performer: Paul Kalina

Co-PI, Co-Creator, Director of Technology: Matthew Ragan

Co-Creator, Lead Writer: Leigh M. Marshall

Co-Creator, Co-Head of Art Department, Costume & Character Design: Chelsea June

Co-Creator, Co-Head of Art Department, Physical & Virtual Set & Lighting Design: Courtney Gaston

Co-Creator, Associate Director, Director of Marketing & Alternate Storytelling: Sarah Lacy Hamilton

Head of Audio & Sound Design & Integration: Bri Atwood

Associate Director of Technology, System Integration: Travis Kelley

Assistant Director of Technology & Lens Studio Character Development: Emily Berkheimer

Stage Manager: Jordan Jones

About:

Media Clown (think Keaton, not Bozo) is an ongoing, devised research project that includes live, digital, and hybrid in-person performances. The interdisciplinary research team has members from theatre, art, engineering, and computer science, with collaborators from industry working alongside UI faculty, staff, current students and alumni. The purpose of the project is to integrate the newest digital technologies, specifically motion capture, Augmented Reality and real-time systems, with traditional/theatrical clown techniques. How does the analog clown remain relevant in the digital age and interact with audiences using digital technologies? What is the nature of the codependency between live, physical performance and live digital media systems? How can clown blur the lines between real and imaginary spaces while holding up a mirror to our advanced technological society?

The clown’s history and importance to community is diverse and spans the ages - from the shaman clowns (Heyokas) of the Hopi and Lakota tribes, to the court jester, to the clowns of Vaudeville (Abbott and Costello, The Marx Brothers), to the current clowns of Cirque du Soleil and those on stage like Bill Irwin and David Shiner (Fool Moon, Old Hats). The clown exists to join us to our shared humanity. Emerging and digital technologies are changing how we connect with our communities, and in today’s rapidly changing world of entertainment, the centuries-old art form of the analog clown is losing relevance to a modern audience. Yet the clown has always evolved with the times, from the streets to the circus ring to the theatre and eventually film (Buster Keaton and Charlie Chaplin) and television (Lucille Ball and Carol Burnett). In order for “the clown [to remain] a recognizable figure who plays transformative and healing roles in many diverse cultures” (Proctor), there is a need for the clown to evolve yet again for the digital-native generation.

One artistic strength of the clown lies in their ability to respond to an audience in real time. The performer must read the audience and shift on a dime to tailor the performance on any particular night in order to create a communal experience. For this reason, live clown shows have avoided immersing their performances in technology, because it was not facile enough to respond to audience reactions and make each performance unique. Media Clown tackles this problem by working with motion capture and real-time systems to eliminate this obstacle and expand the traditional performance space beyond the stage to include audience space, shared virtual spaces, audience control of digital avatars and other special digital media effects. According to contemporary performance scholar Adrian Heathfield, performance has shifted aesthetically from “the optic to the haptic, from the distant to the immersive, from the static relation to the interactive.” Media Clown explores the underlying ideologies at the heart of this paradigm shift by using motion capture, Augmented Reality, Virtual Reality, and new integrated real-time digital media systems to create interactive, hybrid digital/physical spaces, which allow audiences to become directly engaged and physically involved in the story, thus connecting them with the clown and each other through technology.

Read a full history of the project here.

copyright 2024